Tutorial Day

On 8 November 2016, four tutorials will take place on the premises of the Institut für Deutsche Sprache (IDS, site R5). All tutorials are free of charge and are open both to regular conference participants and, provided places are available, to anyone interested, inside or outside the IDS.

To register for a tutorial, please click the "Register" button.

Programme Overview

| TIME | TITLE | INSTRUCTORS | LANGUAGE | ROOM | |
|---|---|---|---|---|---|
| 09:00 - 10:30 | Working with Web Corpora (Part 1) | Felix Bildhauer and Roland Schäfer (Mannheim / Berlin) | English | Lecture hall | Register |
| 10:30 - 10:45 | COFFEE BREAK | | | | |
| 10:45 - 12:15 | Working with Web Corpora (Part 2) | Felix Bildhauer and Roland Schäfer (Mannheim / Berlin) | English | Lecture hall | |
| 12:15 - 14:00 | LUNCH BREAK | | | | |
| 14:00 - 15:30 | InterCorp: Exploring a Multilingual Parallel Corpus (Abstract, Presentation) | Alexandr Rosen (Prague) | English | Lecture hall | Register |
| 14:00 - 15:30 (parallel) | Introduction to Corpus Analysis with KorAP | Nils Diewald and Eliza Margaretha (Mannheim) | English / German | Room 1.28 | Register |
| 15:30 - 15:45 | COFFEE BREAK | | | | |
| 15:45 - 17:15 | Visualizing Linguistic Data with the Free Graphics and Statistics Environment R (Part 1) | Sandra Hansen-Morath and Sascha Wolfer (Mannheim) | English / German | Lecture hall | Register |
| 17:15 - 17:30 | COFFEE BREAK | | | | |
| 17:30 - 19:00 | Visualizing Linguistic Data with the Free Graphics and Statistics Environment R (Part 2) | Sandra Hansen-Morath and Sascha Wolfer (Mannheim) | English / German | Lecture hall | |
| 20:00 | GET-TOGETHER at Wirtshaus UHLAND! | | | | Register |

Working with Web Corpora

Felix Bildhauer and Roland Schäfer (Mannheim / Berlin)

Web corpora (huge, post-processed collections of web pages) provide an increasingly important source of data for linguistic research, thanks to their size, content, and availability. The last decade has seen important developments in the construction of web corpora, and the current generation surpasses its predecessors in cleanliness, level and quality of linguistic annotation and enrichment with meta data. At the same time, web corpora have peculiarities (such as sampling biases, duplication, non-standard orthography and language, lack of some meta data) that may discourage linguists from using them. Linguists working with web corpora should at all times be aware of these limitations.

This workshop will start with a brief introduction to the making of web corpora, discussing some of the most important questions of design and processing, including linguistic annotation. The main focus of the workshop, however, is on practical questions that frequently arise from a linguist's perspective. In particular, we will discuss what web corpora can (and cannot) do for linguists in their daily corpus linguistic work, regarding such issues as reliability of annotation, availability of meta data, data integrity and representativeness, and practical limitations of typical query engines. Much of the workshop will consist of hands-on examples and exercises, and we will introduce practical solutions and workarounds for a number of frequently encountered problems. For maximal benefit, participants should bring their own laptop computer.

Roland Schäfer and Felix Bildhauer have been involved in building corpora from the web since 2011. They have created some of the world's largest web corpora for a variety of languages, including German.

InterCorp: Exploring a Multilingual Parallel Corpus

Alexandr Rosen (Prague)

After a brief introduction of parallel corpora, focusing on their specifics in comparison to standard monolingual corpora, and an overview of those publicly available, we take a closer look at InterCorp, a part of the Czech National Corpus. InterCorp has been on-line since 2008, growing steadily to its present size of 1.7 billion words in 40 languages, with a focus on Czech, but also a substantial share of English, Spanish, German, French, Croatian, Polish, Dutch and a number of other languages. Its core part includes mainly fiction, complemented by legal and journalistic texts, parliament proceedings and film subtitles. The texts are sentence-aligned, tagged (in 23 languages) and lemmatized (in 20 languages). In the practical, hands-on part of the tutorial, we learn how to:

- Select languages and texts

- Make a query including forms, lemmas and tags

- Specify view options, sorting, sampling, filtering, saving results

- Build customized subcorpora

- Use frequency statistics, identify collocations

- Ask for translations of a lexeme, based on the corpus or its part

Finally, we will discuss some challenges and prospects of the on-going project. Experience with corpus search tools will be useful, as will registration as a user of the Czech National Corpus.

Presentation (PDF file)

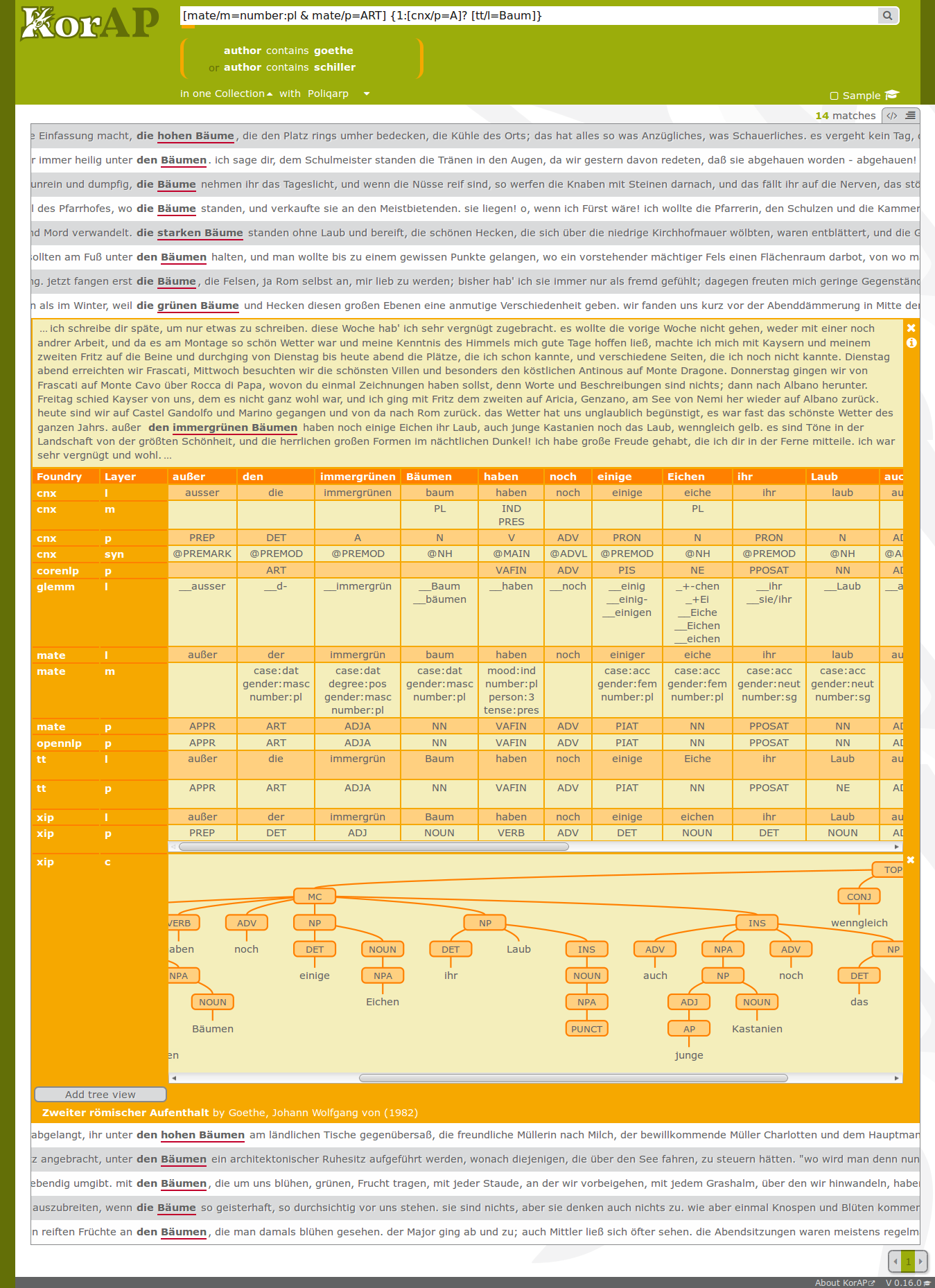

Introduction to Corpus Analysis with KorAP

Nils Diewald and Eliza Margaretha (Mannheim)

In recent years, due to technical advances and accessibility of resources through the world wide web, the field of corpus analysis has gained new attention in providing tools to deal with very large corpora. DeReKo, the German Reference Corpus, for example, has grown beyond 25 billion words alone (Kupietz and Lüngen, 2014). Additional layers of linguistic annotations increase these amounts of data even further, pushing popular applications for corpus analysis like IMS Corpus Workbench (Evert and Hardie, 2011), Annis (Zeldes et al., 2009) or COSMAS II (Bodmer, 1996) to their limits.

KorAP is a web-based corpus analysis platform, developed with a focus on scalability, flexibility, and sustainability - and with the intention to replace COSMAS II as the main access point to DeReKo in the future. KorAP is capable of dealing with very large, multiply annotated, and heterogeneously licensed text collections. It supports researchers by providing a wide range of query constructs and the ad-hoc creation of virtual corpora. In this tutorial, the developers will introduce KorAP for corpus analysis. After a brief description of the current state of development and the architecture of the system, the participants will be able to do their own research using KorAP in a hands-on session.

Following a short starting guide, all participants will be able to search for linguistic phenomena using KorAP, starting with simple sequences of words up to complex linguistic structures across multiple annotation layers. They will also be able to construct complex virtual corpora by means of meta data constraints, and make use of the built-in assisting tools. As KorAP supports multiple query languages like COSMAS II, ANNIS QL (Rosenfeld, 2010), or Poliqarp (Przepiórkowski et al., 2004; a variant of the popular CQP language), users familiar with these languages will easily be able to work with the new system. However, previous knowledge of corpus analysis platforms or corpus query languages is not necessary. To close the session, the developers would like to gather feedback on the current version of the software and discuss further improvements. For those interested in technical details of the KorAP system, the developers are open to questions afterwards.

The tutorial welcomes anyone interested in corpus analysis and corpus analysis software. Participants are requested to bring their own laptops for use in the hands-on session. A common browser in a current version should be pre-installed (e.g. Mozilla Firefox, Google Chrome).

Literature

- Bodmer, Franck (1996): Aspekte der Abfragekomponente von COSMAS II. LDV-INFO, 8:142, pp. 112-122.

- Evert, Stefan / Hardie, Andrew (2011): Twenty-first century corpus workbench: Updating a query architecture for the new millennium. In: Proceedings of the Corpus Linguistics 2011 Conference, Birmingham, UK.

- Kupietz, Marc / Lüngen, Harald (2014): Recent Developments in DeReKo. In: Calzolari, Nicoletta et al. (eds.): Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC'14). Reykjavik: ELRA, 2378-2385.

- Przepiórkowski, Adam / Krynicki, Zygmunt / Dębowski, Łukasz / Woliński, Marcin / Janus, Daniel / Bański, Piotr (2004): A search tool for corpora with positional tagsets and ambiguities. In: Proceedings of the Fourth International Conference on Language Resources and Evaluation (LREC 2004).

- Rosenfeld, Viktor (2010): An implementation of the Annis 2 query language. Technical report, Humboldt-Universität zu Berlin.

- Zeldes, Amir / Ritz, Julia / Lüdeling, Anke / Chiarcos, Christian (2009): ANNIS: A Search Tool for Multi-Layer Annotated Corpora. In: Proceedings of Corpus Linguistics 2009, Liverpool, UK.

Visualizing Linguistic Data with the Free Graphics and Statistics Environment R

Sandra Hansen-Morath and Sascha Wolfer (Mannheim)

R is a flexible, free development environment for statistical analysis that offers numerous options for data visualization and is very well suited to large datasets. Our workshop provides a strongly application-oriented introduction to the program and, through many practical exercises and linguistic application examples, lays the foundations for participants to keep developing their skills with the software independently. We will present elementary exploratory visualizations and introduce the logic of R's base graphics system. In addition, we will present inferential and multivariate statistical methods and show how the results of these procedures can be displayed visually. We will also show how interactive graphics can be created in R.

Current Information

Dates and Deadlines

- 31 May 2016: Submission deadline for abstracts

- 17 June 2016: Extended submission deadline

- 15 July 2016: Notification of acceptance

- 8 November 2016: Tutorial day

- 9-11 November 2016: Conference

Keynotes

- Anne Abeillé (Université Paris Diderot - Paris 7, France)

- Susan Conrad (Portland State University, USA)

- Anke Holler (University of Göttingen, Germany)

- John Nerbonne (Rijksuniversiteit Groningen, Netherlands / Universität Freiburg, Germany)

- Alexandr Rosen (Charles University, Prague, Czech Republic)

Conference Poster

Previous Conferences

- Grammar and Corpora 2005 in Prague (Czech Republic)

- Grammar and Corpora 2007 in Liblice (Czech Republic)

- Grammar and Corpora 2009 in Mannheim (Germany)

- Grammar and Corpora 2012 in Prague (Czech Republic)

- Grammar and Corpora 2014 in Warsaw (Poland)